Who benefits from captioning?

The obvious answer is people who are Deaf or hard-of-hearing. However, captions can also benefit people with auditory processing disorders, people with autism, people for whom English is a second language, and many others. During the course of the pandemic, we discovered that captioning can benefit some people who are deafblind, opening up a whole new world of accessible virtual events to a marginalized group of people who have often experienced isolation.

Why would I need an interpreter AND captioner?

American Sign Language interpreters are the right accommodation for individuals whose first or preferred language is ASL. However, not all persons with hearing loss know sign language. Late-deafened individuals, or those who learned to sign later in life, may not be able to follow ASL interpreting. And some people who use ASL may need or prefer to have the content interpreted into their native language, ASL, rather than read English, which may NOT be their first language. It is always best practice to ask each person which accommodation works best for them rather than assume.

Can’t I just turn on the auto captions?

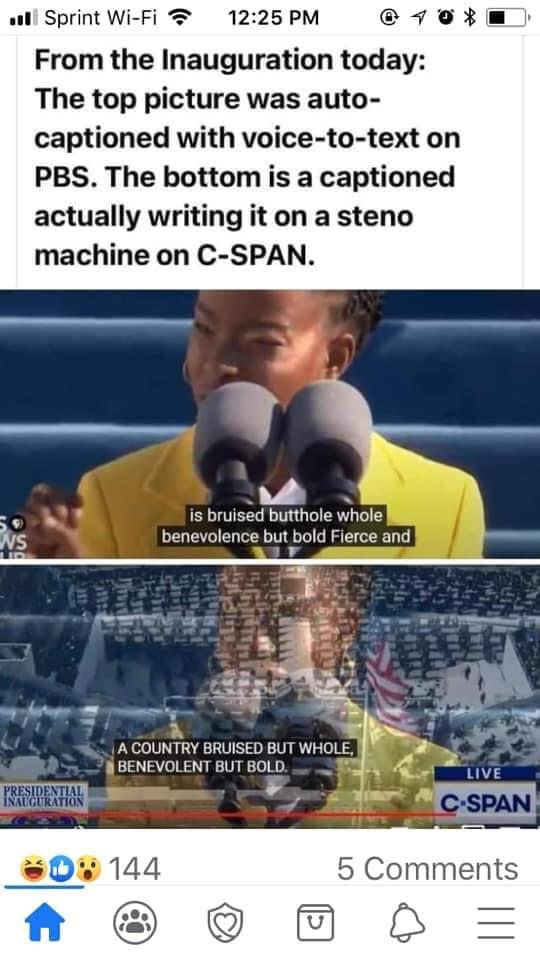

Automated speech recognition (ASR) has improved by leaps and bounds in the past several years. However, nothing can replace the human brain. Good captioning involves judgement and thinking. In the captioning field, we like to say that live captioners are “better than verbatim,” because we can make decisions on the fly. If the audio is breaking up or muffled, we can indicate that so that the caption consumers know what’s going on. If a dog barks in the background or there’s a loud crack of thunder and everyone suddenly looks out the window, we can indicate that as well, so the consumer isn’t left out or wondering what everyone is looking at. We can indicate when a comment was said sarcastically, so a joke is clear instead of potentially misunderstood.

Auto captioning often leaves out punctuation, which makes the captions more exhausting to read because the brain has to work harder to make sense of them. ASR also often butchers names. Here’s a real example: the name Director Locascio, or Dr. Locascio, was rendered by auto captioning at different times in the course of one meeting as “Dracula Casio,” “Dr. Outlook because EO,” and “Dr. look cozy zero.”

This is a crude but real example of auto captions versus a live captioner, from the 2021 Presidential Inauguration:

Who pays for the captioning or interpreting services?

Under the Americans with Disabilities Act (ADA), the Rehabilitation Act, and the Individuals with Disabilities Education Act (IDEA), captioning and/or ASL interpreting are considered reasonable accommodations. Any public entity offering services to the public must accommodate people with disabilities upon request. The cost of the service is to be covered by the entity putting on the event, unless it can show a court that the expense of providing the service would be an undue burden. The ADA applies to any public facility, with few exceptions. https://www.ada.gov/